January 16, 2020 / by Giorgia Cantisani / SONG

Investigating the shared neural foundations of speech and music perception

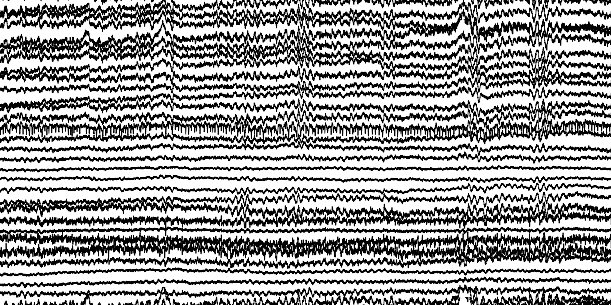

Music and speech are forms of communication unique to humans, and their perception and production underlie remarkable abilities of our brain. Previous research suggests that low-level speech and music acoustic signals are transformed into high-level concepts by a hierarchical neural system. However, despite evidence for shared and parallel mechanisms in speech and music processing, the two phenomena have largely been investigated separately. In this project, we will investigate the shared neural mechanisms of speech and music perception in ecologically valid scenarios by analyzing behavioral and multivariate neural data with signal processing and machine learning methods. Specifically, we will probe speech and music processing simultaneously by considering the case of sung speech perception, a scenario that engages both processes.